2022.2.15 15:00~16:30 Alexey Dosovitskiy, Transformers for Computer Vision

A very nice blog post, https://iaml-it.github.io/posts/2021-04-28-transformers-in-vision/

- Kolesnikov et al, Big Transfer (BiT): General Visual Representation Learning, ECCV 2020

- Barbu et al, ObjectNet: A large-scale bias-controlled dataset for pushing the limits of object recognition models, NIPS 2019

- Dosovitskiy et al, An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale, ICLR 2021

- Zhai et al, Scaling Vision Transformers, 2021

- Liu et al, Swin Transformer: Hierarchical Vision Transformer using Shifted Windows, ICCV 2021

- Tolstikhin et al, MLP-Mixer: An all-MLP Architecture for Vision, 2021

- Ranftl et al, Vision Transformers for Dense Prediction, ICCV 2021

- Arnab et al, ViViT: A Video Vision Transformer, ICCV 2021

- Carion et al, End-to-End Object Detection with Transformers, ECCV 2020

- Zhao et al, Point Transformer, ICCV 2021

- Sajjadi et al, Scene Representation Transformer: Geometry-Free Novel View Synthesis Through Set-Latent Scene Representations, arXiv 2021

- Caron et al, Emerging Properties in Self-Supervised Vision Transformers, ICCV 2021

- Bao et al, BEiT: BERT Pre-Training of Image Transformers, arXiv 2021

- Kaiming He et al, Masked Autoencoders Are Scalable Vision Learners, arXiv 2021

- Radford et al, Learning Transferable Visual Models From Natural Language Supervision, ICML 2021

- Akbari et al, VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text, NIPS 2021

2022.2.15 16:30~18:00 Ivan Laptev

Visual Representations from Videos

– Miech et al, End-to-End Learning of Visual Representations from Uncurated Instructional Videos, CVPR 2020 Oral

– Miech et al, HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips, ICCV 2019

Video Question Answering

– Yang et al, Just Ask: Learning to Answer Questions from Millions of Narrated Videos, ICCV 2021

– HowToVQA69M Datasets

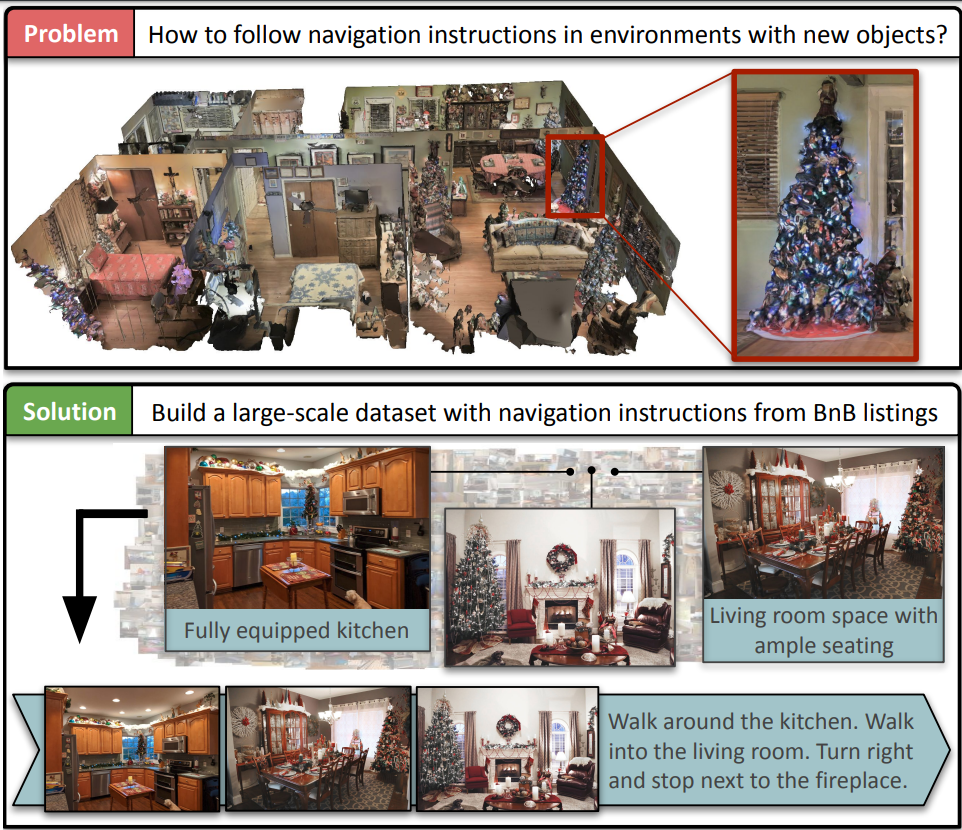

Vision-and-Language Navigation (VLN)

– Guhur et al, Airbert: In-domain Pretraining for Vision-and-Language Navigation, ICCV 2021

– Chen et al, History Aware Multimodal Transformer for Vision-and-Language Navigation, NIPS 2021

Efficient transformers

– Miech et al, Thinking Fast and Slow: Efficient Text-to-Visual Retrieval with Transformers, CVPR 2021

– El-Nouby et al, XCiT: Cross-Covariance Image Transformers, NIPS 2021

2022.2.16 13:30~15:00 Jun-Yan Zhu, Human-in-the-loop Model Creation

Their Works

– Isola et al, Image-to-image translation with conditional adversarial networks, CVPR 2017

– Zhu et al, Unpaired image-to-image translation using cycle-consistent adversarial networks, ICCV 2017

– Park et al, GauGAN, SIGGRAPH RealTime Live 2019

– Park et al, Swapping Autoencoder For Deep Image Manipulation, NIPS 2020

Data Augmentation for GANs

– Zhao et al, Differentiable Augmentation for Data Efficient GAN Training, NIPS 2020

– Karras et al, Training Generative Adversarial Networks with Limited Data, NIPS 2020

– Tran et al, On Data Augmentation for GAN Training, IEEE TIP 2020

– Zhao et al, Image Augmentations for GAN Training, arXiv 2020

Customizing a GAN with sketches

– Wang et al, Sketch Your Own GAN, ICCV 2021

– Bau et al, Rewriting a Deep Generative Model, ECCV 2020

2022.2.16 16:30~18:00 Armand Joulin, Advances in Self-supervised Learning

DINO

Caron et al, Emerging Properties in Self-Supervised Vision Transformers, 2021

Vision Transformer

+ Add Exponential Moving Average

+ Simplifying Normalization

+ Centering

+ Sharpening

+ Multi-crop

DINO + ViT : excellent K-NN performance

Application to copy detection

Applications: style transfer

Tumanyan et al, Splicing ViT Features for Semantic Appearance Transfer, 2022

Applications: part discovery

Amir et al, Deep ViT Features as Dense Visual Descriptors, 2022