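- 2020 Korean AI Association Winter School Notes – 1. Prof. Jaegul Choo (Korea University), Human in the loop

- 2020 Korean AI Association Winter School Notes – 2. Prof. Gunhee Kim (Seoul National University), Pretrained Language Model

- 2020 Korean AI Association Winter School Notes – 3. Prof. Il-Chul Moon (KAIST), Explicit Deep Generative Model

- 2020 Korean AI Association Winter School Notes – 4. Prof. Jinwoo Shin (KAIST), Adversarial Robustness of DNN

- 2020 Korean AI Association Winter School Notes – 5. Dr. Juho Lee (AITrics), Set-input Neural Networks and Amortized Clustering

- 2020 Korean AI Association Winter School Notes – 6. Prof. Eunho Yang (KAIST), Deep Generative Models

- 2020 Korean AI Association Winter School Notes – 7. Dr. Saehoon Kim (AITrics), Meta Learning for Few-shot Classification

- 2020 Korean AI Association Winter School Notes – 8. Prof. Sungbin Lim (UNIST), Automated Machine Learning for Visual Domain

- 2020 Korean AI Association Winter School Notes – 9. Prof. Seung-won Hwang (Yonsei University), Knowledge in Neural NLP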

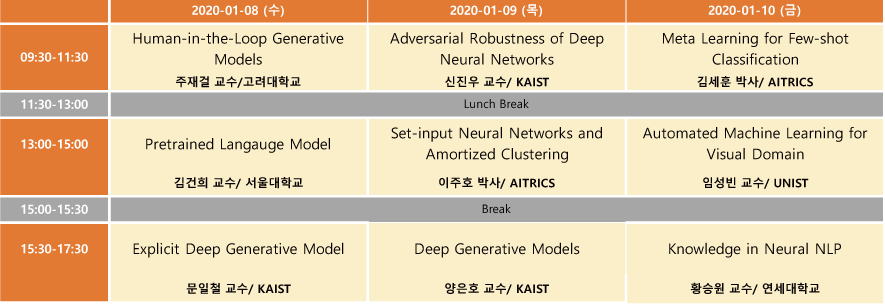

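I registered for the 2020 Korean AI Association Winter School and attended all three days, from January 8 to 10, 2020. There were nine speakers in total; the program schedule is shown in the image above.

I originally planned to cover the whole event in a single post, but each topic had quite a lot of content, so I split it into a series with one post per lecture. This eighth post covers Prof. Sungbin Lim's (UNIST) lecture, "Automated Machine Learning for Visual Domain".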

- Introduction

- kakao brain on Competitions

- Data Science Bowl 2018 [Link]

- YouTube-8M Challenge [Link]

- GQA Challenge 2019 [Link]

- BEA 2019 GEC Challenge [Link]

- NeurIPS 2019 AutoCV Challenge [Link]

- Medical Segmentation Decathlon [Link]

- Multi-task learning

- Characteristics

- 3D images

- small amount of data

- unbalanced labels

- wide range of object scales (from small to large objects)

- multi-class labels

- Multi-task learning + knowledge transfer

- From known tasks, e.g., liver, brain, hippocampus, …

- To unknown tasks, e.g., colon cancer, hepatic vessels, …


- Baseline : 3D U-Net

- Kayalibay et al, CNN-based Segmentation of Medical Imaging Data, 2017


- NAS

- Introduction

- Definition

- The process of automating architecture engineering

- NAS can be seen as a subfield of AutoML

- Significant overlap with:

- Hyperparameter optimization

- Meta learning

- Literature

- AutoML.org Freiburg [Link]

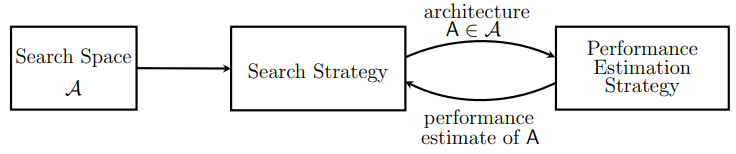

- Categorizing NAS

- Elsken et al, Neural Architecture Search: A Survey, 2019


- Search Space

- Soft Start

- Chain-structured Neural Nets

- Multi-branch Neural Nets

- Network Structure Code

- Zhong et al, BlockQNN: Efficient Block-wise Neural Network Architecture Generation, CVPR 2018

- CNN Design

- Pham et al, Efficient Neural Architecture Search via Parameter Sharing, ICML 2018

- Perez-Rua et al, Efficient Progressive Neural Architecture Search, BMVC 2018

- Cell Design

- Zoph et al, Learning Transferable Architectures for Scalable Image Recognition, CVPR 2018

- Liu et al, Progressive Neural Architecture Search, ECCV 2018
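The difference between a chain-structured space and a cell-based space shows up directly in the size of the search space. A minimal sketch, assuming a hypothetical four-operation set and a simplified cell encoding (each node picks one earlier node as input and one operation), which is not the exact NASNet or PNAS cell:

```python
import random

# Hypothetical candidate operations; real search spaces use larger sets.
OPS = ["conv3x3", "conv5x5", "maxpool3x3", "identity"]


def chain_space_size(num_layers, ops=OPS):
    """Chain-structured net: every layer independently picks one op."""
    return len(ops) ** num_layers


def sample_chain(num_layers, rng, ops=OPS):
    return [rng.choice(ops) for _ in range(num_layers)]


def cell_space_size(num_nodes, ops=OPS):
    """Simplified cell: node i picks one input among the cell input and
    its i predecessors (i + 1 choices), plus one op."""
    size = 1
    for i in range(num_nodes):
        size *= (i + 1) * len(ops)
    return size


def sample_cell(num_nodes, rng, ops=OPS):
    # Each entry is (index of the input node, op name); index 0 is the cell input.
    return [(rng.randrange(i + 1), rng.choice(ops)) for i in range(num_nodes)]


def build_network(cell, num_repeats):
    # The same searched cell is repeated to form the full network.
    return [cell] * num_repeats


if __name__ == "__main__":
    rng = random.Random(0)
    print(chain_space_size(20))  # 1099511627776 (= 4**20) chains of 20 layers
    print(cell_space_size(5))    # 122880 (= 5! * 4**5) cells of 5 nodes
    net = build_network(sample_cell(5, rng), num_repeats=4)
    print(len(net), net[0])
```

Because only the cell is searched and then stacked, the space does not grow with network depth, and the same cell can be stacked more times for a larger dataset, as in Zoph et al.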


- Search Strategy

- Reinforcement Learning

- Model the layer selection process as an MDP

- Baker et al, Designing Neural Network Architectures using Reinforcement Learning, ICLR 2017

- Zoph & Le, Neural Architecture Search with Reinforcement Learning, ICLR 2017
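The RL view can be sketched with the REINFORCE policy gradient. This is a heavily simplified sketch under stated assumptions: the controller is one independent softmax per layer instead of the RNN controller of Zoph & Le (Baker et al. use Q-learning instead), and `toy_reward` is a hypothetical stand-in for training a child network and measuring its validation accuracy.

```python
import math
import random

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical op set


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(arch):
    # Hypothetical stand-in for "train the child network, return val accuracy".
    return sum(op == "conv3x3" for op in arch) / len(arch)


def reinforce_search(num_layers=4, steps=1000, lr=0.2, seed=0):
    rng = random.Random(seed)
    logits = [[0.0] * len(OPS) for _ in range(num_layers)]
    baseline = 0.0  # moving-average baseline to reduce gradient variance
    for _ in range(steps):
        probs = [softmax(row) for row in logits]
        # Sample one op per layer: each layer choice is one action of the MDP.
        choice = [rng.choices(range(len(OPS)), weights=p)[0] for p in probs]
        reward = toy_reward([OPS[k] for k in choice])
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # REINFORCE: d log softmax(z)[k] / d z[j] = 1[j == k] - p[j]
        for layer, k in enumerate(choice):
            for j in range(len(OPS)):
                grad = (1.0 if j == k else 0.0) - probs[layer][j]
                logits[layer][j] += lr * advantage * grad
    # Return the most likely op for every layer.
    return [OPS[max(range(len(OPS)), key=lambda j: row[j])] for row in logits]


print(reinforce_search())
```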

- Evolutionary methods

- Zoph et al, Learning Transferable Architectures for Scalable Image Recognition, CVPR 2018

- AmoebaNet

- Real et al, Regularized Evolution for Image Classifier Architecture Search, AAAI 2019

- SOTA on ImageNet classification at the time of publication

- Liu et al, Progressive Neural Architecture Search, ECCV 2018
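The aging (regularized) evolution loop of Real et al. can be sketched as follows: sample a few models from the population, mutate the best of them, add the child, and discard the oldest model rather than the worst. The op set, the target architecture, and `fitness` are hypothetical stand-ins; in practice fitness is the validation accuracy of a trained network.

```python
import collections
import random

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical op set
# Hypothetical "best" architecture that the toy fitness rewards.
TARGET = ["conv3x3", "conv5x5", "conv3x3", "maxpool", "conv3x3", "identity"]


def fitness(arch):
    # Hypothetical stand-in for "train the model, return validation accuracy".
    return sum(a == t for a, t in zip(arch, TARGET))


def random_arch(rng):
    return [rng.choice(OPS) for _ in TARGET]


def mutate(arch, rng):
    # Replace the op at one random position with a different op.
    child = list(arch)
    pos = rng.randrange(len(child))
    child[pos] = rng.choice([op for op in OPS if op != child[pos]])
    return child


def regularized_evolution(cycles=2000, population_size=50, sample_size=10, seed=0):
    rng = random.Random(seed)
    population = collections.deque()
    history = []
    while len(population) < population_size:
        arch = random_arch(rng)
        population.append((arch, fitness(arch)))
        history.append(population[-1])
    while len(history) < cycles:
        # Tournament selection: the best of a random sample becomes the parent.
        sample = rng.sample(list(population), sample_size)
        parent = max(sample, key=lambda item: item[1])
        child = mutate(parent[0], rng)
        population.append((child, fitness(child)))
        history.append(population[-1])
        # Aging: remove the OLDEST member, not the worst one.
        population.popleft()
    return max(history, key=lambda item: item[1])


best_arch, best_fit = regularized_evolution()
print(best_fit, best_arch)
```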

- Gradient-based

- Hyperparameter optimization

- Shin et al, Differentiable Neural Network Architecture Search, ICLR 2018 Workshop

- Architecture selection

- Saxena & Verbeek, Convolutional Neural Fabrics, NIPS 2016

- Connectivity selection

- Ahmed & Torresani, MaskConnect: Connectivity Learning by Gradient Descent, ECCV 2018

- Micro-search strategy

- Liu et al, DARTS: Differentiable Architecture Search, ICLR 2019

- Optimization

\min_{\alpha} \; L_{val}\left(w^*(\alpha), \alpha\right)

\text{s.t.} \quad w^*(\alpha) = \arg\min_{w} \; L_{train}(w, \alpha)

- Inner loop: find w^*(\alpha) by minimizing L_{train} over w

- Outer loop: find \alpha^* by minimizing L_{val} over \alpha

- DARTS needs fine-tuning

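The bilevel problem is usually solved approximately by alternating gradient steps. The toy sketch below assumes a made-up 1D regression task (y = 2x²) and a hypothetical three-op set; it shows the continuous relaxation (a softmax-weighted mixed operation) and the first-order alternating update described in the DARTS paper: one step on `w` with the training loss, then one step on `alpha` with the validation loss. The second-order variant, which differentiates through w*(α), is omitted.

```python
import math

# Hypothetical candidate operations on a scalar input.
OPS = {"identity": lambda x: x, "square": lambda x: x * x, "cube": lambda x: x ** 3}
NAMES = list(OPS)


def softmax(logits):
    m = max(logits)
    exps = [math.exp(a - m) for a in logits]
    total = sum(exps)
    return [e / total for e in exps]


def loss_and_grads(data, w, alpha):
    """MSE of y_hat = w * mixed_op(x), with gradients w.r.t. w and alpha."""
    p = softmax(alpha)
    n = len(data)
    loss, grad_w, grad_alpha = 0.0, 0.0, [0.0] * len(alpha)
    for x, y in data:
        outs = [OPS[name](x) for name in NAMES]
        mixed = sum(pk * ok for pk, ok in zip(p, outs))  # continuous relaxation
        err = w * mixed - y
        loss += err * err / n
        grad_w += 2 * err * mixed / n
        for k in range(len(alpha)):
            # d mixed / d alpha_k = p_k * (o_k(x) - mixed)
            grad_alpha[k] += 2 * err * w * p[k] * (outs[k] - mixed) / n
    return loss, grad_w, grad_alpha


def darts_first_order(train, val, steps=2000, lr_w=0.05, lr_alpha=0.05):
    w, alpha = 1.0, [0.0] * len(NAMES)
    for _ in range(steps):
        _, grad_w, _ = loss_and_grads(train, w, alpha)  # inner: L_train w.r.t. w
        w -= lr_w * grad_w
        _, _, grad_a = loss_and_grads(val, w, alpha)    # outer: L_val w.r.t. alpha
        alpha = [a - lr_alpha * g for a, g in zip(alpha, grad_a)]
    return w, alpha


# Hypothetical toy data from y = 2x^2; train and validation use disjoint x grids.
train = [(i / 10, 2 * (i / 10) ** 2) for i in range(-10, 11)]
val = [(i / 10 + 0.05, 2 * (i / 10 + 0.05) ** 2) for i in range(-10, 10)]
w, alpha = darts_first_order(train, val)
# Discretize: keep the op with the largest alpha; that architecture is then retrained.
derived = NAMES[max(range(len(alpha)), key=lambda k: alpha[k])]
print(derived, [round(p, 3) for p in softmax(alpha)])
```

The final argmax step is why the searched architecture still has to be trained again after the search.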

- Softmax annealing

- Xie et al, SNAS: Stochastic Neural Architecture Search, ICLR 2019


\bar{o}^{(i,j)}(x) = \sum_{o \in O} \dfrac{\exp(\alpha^{(i,j)}_o)}{\sum_{o' \in O} \exp(\alpha^{(i,j)}_{o'})} o(x) \;\rightarrow\; \sum_{o \in O} \dfrac{\exp(\alpha^{(i,j)}_o / \tau)}{\sum_{o' \in O} \exp(\alpha^{(i,j)}_{o'} / \tau)} o(x)

- Gumbel noise

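Temperature annealing and Gumbel noise can be illustrated in a few lines. This is a generic Gumbel-softmax sketch over plain lists of logits; SNAS applies this kind of relaxed sampling to the architecture distribution, but the code is an illustration, not taken from the SNAS implementation.

```python
import math
import random


def softmax_with_temperature(logits, tau):
    """softmax(alpha / tau): a smaller tau gives a sharper, more one-hot distribution."""
    scaled = [a / tau for a in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def gumbel_softmax_sample(logits, tau, rng):
    """Relaxed one-hot sample: add Gumbel(0, 1) noise, then apply the tempered softmax."""
    noisy = []
    for a in logits:
        u = max(rng.random(), 1e-12)               # avoid log(0)
        noisy.append(a - math.log(-math.log(u)))   # Gumbel noise g = -log(-log(u))
    return softmax_with_temperature(noisy, tau)


alpha = [1.0, 2.0, 3.0]  # hypothetical architecture logits for one edge
for tau in (5.0, 1.0, 0.1):  # an annealing schedule lowers tau during training
    print(tau, [round(p, 3) for p in softmax_with_temperature(alpha, tau)])
print(gumbel_softmax_sample(alpha, tau=0.5, rng=random.Random(0)))
```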


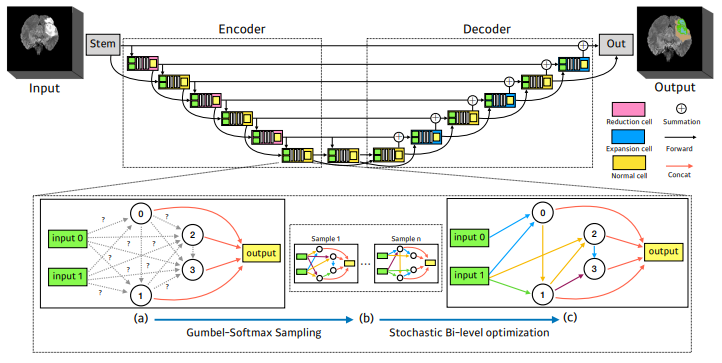

- Scalable NAS

- Applications to 3D Medical Image Segmentation

- Baseline : 3D U-Net

- Problem

- DARTS on 3D images

- Out of memory

- Brain MRI volumes are large (155 × 240 × 240)

- Backpropagation is infeasible


- Stochastic NAS + operation sampling

- Kim et al, Scalable Neural Architecture Search for 3D Medical Image Segmentation, MICCAI 2019

- Scalable NAS is transferable

- Kim et al, Scalable Neural Architecture Search for 3D Medical Image Segmentation, MICCAI 2019
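A rough sketch of why operation sampling helps with memory, under loudly stated assumptions: a chain of edges over scalar values, a hypothetical op set, and a count of evaluated ops standing in for stored activations. A DARTS-style mixed op evaluates every candidate on every edge, while sampling one op per edge from softmax(α) evaluates only one. How Kim et al. estimate gradients for the sampled ops is not reproduced here.

```python
import math
import random

# Hypothetical candidate ops on a scalar value.
OPS = [lambda x: x, lambda x: -x, lambda x: 0.5 * x, lambda x: 0.0]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(a - m) for a in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward_mixed(x, edge_alphas):
    """DARTS-style: every candidate op runs on every edge (memory ~ edges * ops)."""
    evaluated = 0
    for alpha in edge_alphas:
        p = softmax(alpha)
        outs = [op(x) for op in OPS]
        evaluated += len(OPS)
        x = sum(pk * o for pk, o in zip(p, outs))
    return x, evaluated


def forward_sampled(x, edge_alphas, rng):
    """Operation sampling: one op per edge drawn from softmax(alpha) (memory ~ edges)."""
    evaluated, chosen = 0, []
    for alpha in edge_alphas:
        k = rng.choices(range(len(OPS)), weights=softmax(alpha))[0]
        x = OPS[k](x)
        evaluated += 1
        chosen.append(k)
    return x, evaluated, chosen


edge_alphas = [[0.0, 0.5, 1.0, -1.0] for _ in range(8)]  # hypothetical logits, 8 edges
print(forward_mixed(1.0, edge_alphas)[1])                      # 32 op evaluations
print(forward_sampled(1.0, edge_alphas, random.Random(0))[1])  # 8 op evaluations
```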

- torchgpipe [Link]

- A GPipe implementation in PyTorch.

- GPipe is a scalable pipeline parallelism library published by Google Brain
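The core idea of GPipe-style pipeline parallelism is to split a mini-batch into micro-batches so that different partitions (devices) work on different micro-batches at the same clock tick. Below is a small simulation of the forward fill-and-drain schedule, assuming m micro-batches and n partitions, where micro-batch i reaches partition j at tick i + j. In torchgpipe itself this is configured by wrapping an `nn.Sequential` as `GPipe(model, balance=..., chunks=...)`; the code below only simulates the schedule and does not use the library.

```python
def gpipe_forward_schedule(num_microbatches, num_partitions):
    """For each clock tick, list the (microbatch, partition) pairs that run in parallel."""
    schedule = []
    for tick in range(num_microbatches + num_partitions - 1):
        schedule.append([(tick - j, j) for j in range(num_partitions)
                         if 0 <= tick - j < num_microbatches])
    return schedule


def bubble_fraction(num_microbatches, num_partitions):
    """Share of idle (partition, tick) slots in the forward pass: (n - 1) / (m + n - 1)."""
    ticks = num_microbatches + num_partitions - 1
    busy = num_microbatches * num_partitions
    return 1 - busy / (ticks * num_partitions)


for tick, work in enumerate(gpipe_forward_schedule(4, 3)):
    print(tick, work)
# More micro-batches -> a smaller idle "bubble" at the start and end of the pipeline.
print(bubble_fraction(4, 3), bubble_fraction(32, 3))
```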

- AutoAugment

- Automated Data Augmentation Strategies Search

- Problem

- Incomplete Data

- Data with uncertainty (wrong labels)

- Inconsistent data (annotations from doctors, residents, and interns)

- Insufficient data (too few samples)


- AutoAug = automated augmentation search

- Cubuk et al, AutoAugment: Learning Augmentation Strategies from Data, CVPR 2019
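In AutoAugment, a policy consists of several sub-policies, each a sequence of two operations with an application probability and a magnitude; for each image, one sub-policy is picked at random. A minimal sketch of applying such a policy, assuming "images" are flat lists of 8-bit pixel values and using a few hypothetical stand-in operations with made-up probabilities and magnitudes (not a policy from the paper):

```python
import random


# Hypothetical stand-in ops on a flat list of 8-bit pixel values.
def brightness(img, magnitude):
    # Shift intensities by an amount proportional to the magnitude (0-10 scale).
    return [min(255, max(0, p + 10 * magnitude)) for p in img]


def invert(img, magnitude):
    return [255 - p for p in img]  # magnitude is ignored for Invert


def posterize(img, magnitude):
    # Keep fewer high bits as the magnitude grows (at least 1 bit is kept).
    bits = max(1, 8 - magnitude // 2)
    mask = (0xFF << (8 - bits)) & 0xFF
    return [p & mask for p in img]


OPS = {"Brightness": brightness, "Invert": invert, "Posterize": posterize}

# Policy = list of sub-policies; sub-policy = two (op, probability, magnitude) triples.
# These values are made up for illustration.
POLICY = [
    [("Invert", 0.1, 0), ("Brightness", 0.6, 5)],
    [("Posterize", 0.8, 6), ("Invert", 0.2, 0)],
]


def apply_policy(img, policy, rng):
    sub_policy = rng.choice(policy)  # one sub-policy chosen uniformly per image
    for name, prob, magnitude in sub_policy:
        if rng.random() < prob:      # each op fires with its own probability
            img = OPS[name](img, magnitude)
    return img


print(apply_policy([0, 64, 128, 255], POLICY, random.Random(0)))
```

AutoAugment's search then looks for the sub-policy entries (op, probability, magnitude) that maximize validation accuracy; the sketch only covers how a found policy is applied.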

- AutoAugment for Insufficient Data

- Lim et al, Fast AutoAugment, NeurIPS 2019

- Find augmentation policies without human intervention

- Lim et al, Fast AutoAugment, NeurIPS 2019
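Fast AutoAugment avoids retraining a network for every candidate policy: roughly, a model is trained on one split of the training data, and candidate policies are scored by that model's loss on another split with the policy applied, keeping the policies whose augmented data the model still handles well (the paper frames this as density matching). The sketch below is heavily simplified, and every piece is an assumption: a 1D logistic-regression model on made-up data, a single hypothetical `shift` augmentation, and exhaustive search over a handful of candidates instead of the Bayesian optimization and K-fold splits used in the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data, epochs=200, lr=0.5):
    """1D logistic regression: stand-in for the model trained on split D_M."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y
            gw += err * x
            gb += err
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return w, b


def nll(model, data):
    w, b = model
    total = 0.0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def shift(data, amount):
    # Hypothetical augmentation: translate every input by a constant.
    return [(x + amount, y) for x, y in data]


# Made-up toy splits: D_M trains the model, D_A scores the candidate policies.
d_m = [(-2.0, 0), (-1.5, 0), (-1.0, 0), (1.0, 1), (1.5, 1), (2.0, 1)]
d_a = [(-1.75, 0), (-1.25, 0), (1.25, 1), (1.75, 1)]

model = train_logistic(d_m)  # trained once, never retrained during the search
candidates = [-1.0, -0.5, 0.0, 0.5, 1.0]
scores = {s: nll(model, shift(d_a, s)) for s in candidates}
best = min(scores, key=scores.get)  # lowest loss on augmented D_A
print(best, {s: round(v, 3) for s, v in scores.items()})
```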

- Fast Training with Time Constraint

- Automatic Computationally LIght Network Transfer, 2020 [Link]

- 20 minutes on 1 GPU + Fast AutoAugment

- Automatic Computationally LIght Network Transfer, 2020 [Link]

- Further Reading

- Liu et al, Auto-DeepLab: Hierarchical Neural Architecture Search for Semantic Image Segmentation, CVPR 2019

- Chen et al, DetNAS: Backbone Search for Object Detection, NeurIPS 2019

- Cai et al, ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware, ICLR 2019

- Cubuk et al, RandAugment: Practical automated data augmentation with a reduced search space, arXiv 2019

- Hataya et al, Faster AutoAugment: Learning Augmentation Strategies using Backpropagation, arXiv 2019

- Zhang et al, Adversarial AutoAugment, ICLR 2020
